A $6 million AI project has knocked ChatGPT off its throne as the #1 iPhone app and wiped nearly $600 billion off NVIDIA’s market value in a single day. DeepSeek is an impressive chatbot that has generated excitement worldwide. If you’re curious and want to test it, PocketPal AI lets you run various LLMs, including DeepSeek, locally on Android and iOS. We’ll also cover easy ways to install DeepSeek R1 on Windows, Linux, and macOS machines.

DeepSeek stands out from many other AI tools because its open models can run completely offline. This keeps your data on your own device and removes the network round trip from every response. Running DeepSeek locally lets you personalize and control your AI experience without relying on a cloud-based server. Whether you’re using Android, iOS, Windows, Linux, or macOS, setting up DeepSeek can be straightforward. Let’s dive into the step-by-step guides for each platform.

What is DeepSeek AI and Why Install Locally

DeepSeek AI launched in 2023 as an open-source artificial intelligence tool that handles everything from code completion to document generation. The company reportedly trained this powerful AI model for under $6 million, far less than competitors who invested hundreds of millions in similar technologies.

DeepSeek models show impressive results in various tests. They reportedly scored 5.5 out of 6 in performance tests, ahead of OpenAI’s o1 model and GPT-4o, while costing much less to run.

DeepSeek’s technical breakthroughs make it efficient:

- Inference-time compute scaling adjusts effort to task complexity

- Load-balancing strategy prevents expert overload through dynamic adjustments

- PTX-level programming optimizes how GPU instructions run

The model also handles a range of tasks well:

- Real-time responses and a strong conversational interface

- Advanced reasoning capabilities

- Strong support for technical tasks and coding

- Quick multilingual processing

The full-size DeepSeek-R1 works best on GPU-based cloud instances such as Amazon SageMaker’s ml.p5e.48xlarge. Context length also differs between models, with DeepSeek V3 processing up to 66,000 tokens.

Key Features

DeepSeek’s capabilities and availability set it apart from other AI tools. Model versions range from 1.5B to 70B parameters, so you can choose one that matches your hardware. Here’s what makes DeepSeek stand out:

- Advanced reasoning and problem-solving capabilities

- Code completion and debugging assistance

- Document generation and processing

- User-friendly interface for both technical and non-technical users

- Complete offline functionality

- No subscription fees or hidden costs

The smaller DeepSeek models run smoothly on consumer hardware and need minimal infrastructure compared to other AI models. Because they are optimized for local deployment, they work well on modern consumer GPUs.

Privacy Advantages of Local Installation

Running DeepSeek locally gives you substantial privacy benefits. Your device or server handles all data processing, so you don’t need to send sensitive information to external servers. Because nothing leaves your machine, a local setup offers these key benefits:

- Lower operational costs after initial setup

- You retain control over configurations and updates

- Better data security with no external exposure

- Reduced latency due to local processing

- Customizable hardware optimization

Choosing the Right DeepSeek Model to Run Locally

DeepSeek R1 is available in several sizes ranging from 1.5B to 70B parameters. To run DeepSeek R1 locally on a PC, Mac, or Linux system, your computer needs at least 8GB of RAM. This is enough to run the smaller DeepSeek R1 1.5B model efficiently, at about 13 tokens per second.

As for smartphones, at least 6GB of memory is recommended to run the DeepSeek R1 model smoothly. Keep in mind that a smartphone also needs RAM for the OS, other apps, and the GPU, so leave at least a 50% buffer. Otherwise the model spills into swap space and slows your phone down.
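
The buffer rule above can be turned into a quick check. This is a minimal Python sketch under one reading of the rule, namely that the phone should have at least 1.5 times the model’s file size in RAM. The function name `fits_in_phone_ram` and the 4.7 GB file size are hypothetical values chosen for illustration.

```python
def fits_in_phone_ram(model_size_gb: float, phone_ram_gb: float,
                      buffer: float = 0.5) -> bool:
    """Return True if the model plus a safety buffer fits in the phone's RAM.

    Assumption: a "50% buffer" means total RAM >= model size * 1.5.
    """
    required_gb = model_size_gb * (1.0 + buffer)
    return required_gb <= phone_ram_gb


# A 2.3 GB model file on a 6 GB phone (3.45 GB needed with the buffer).
small_ok = fits_in_phone_ram(2.3, 6.0)

# A hypothetical 4.7 GB model file on the same phone (7.05 GB needed).
large_ok = fits_in_phone_ram(4.7, 6.0)

print(small_ok, large_ok)
```

If the second check fails on your device, a smaller model or a more aggressive quantization is the safer choice.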

DeepSeek offers several model versions, each built to work best for specific tasks and computing power. Its main model uses a Mixture-of-Experts (MoE) system with 671 billion parameters, but it activates only about 37 billion of them for each token. This approach uses resources more efficiently without sacrificing capability.
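
To make the Mixture-of-Experts idea concrete, here is a toy Python sketch of top-k routing. It is not DeepSeek’s actual router: the 16 experts, the random scores, and the names `route_top_k` and `active_fraction` are assumptions for illustration. Only the 671 billion and 37 billion figures come from the text above.

```python
import math
import random

TOTAL_PARAMS_B = 671   # total parameters in billions (from the article)
ACTIVE_PARAMS_B = 37   # parameters used per step in billions (from the article)


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of the model's parameters that is actually used at once."""
    return active_b / total_b


def route_top_k(scores: list[float], k: int) -> dict[int, float]:
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    peak = max(scores[i] for i in top)
    exps = {i: math.exp(scores[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


rng = random.Random(0)
scores = [rng.gauss(0.0, 1.0) for _ in range(16)]  # toy setup: 16 experts
chosen = route_top_k(scores, k=2)                  # only 2 of 16 experts run

print(f"active share: {active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B):.1%}")
print(f"experts used: {sorted(chosen)}")
```

The point of the sketch is that the router scores every expert but only runs a few of them, which is why the compute cost tracks the active parameters rather than the total.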

The distilled model variants include:

- DeepSeek R1-Distill-Qwen ranging from 1.5B to 32B parameters

- DeepSeek R1-Distill-Llama available in 8B and 70B configurations

Model size affects how much storage you need. The 1.5B model takes up about 2.3GB of storage, while the 70B model needs over 40GB. These options work on hardware of all types, from personal computers to enterprise servers.

Each DeepSeek version needs specific hardware to run at its best. Here’s what different model sizes need:

| Model Variant | VRAM Requirement | Recommended GPU |
|---|---|---|
| R1-Distill-Qwen-1.5B | 0.7 GB | RTX 3060 8GB |
| R1-Distill-Qwen-7B | 3.3 GB | RTX 3070 8GB |
| R1-Distill-Llama-8B | 3.7 GB | RTX 3070 8GB |
| R1-Distill-Qwen-14B | 6.5 GB | RTX 3080 12GB |
| R1-Distill-Qwen-32B | 14.9 GB | RTX 4090 24GB |
| R1-Distill-Llama-70B | 32.7 GB | RTX 4090 24GB (x2) |
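
The VRAM figures in this table appear consistent with storing each weight in 4 bits and counting only the weights. That is an inference from the numbers, not something the source states, and it ignores the extra memory needed for context and activations. The Python sketch below shows the arithmetic, using nominal parameter counts (for example 7e9 for the 7B model) as an assumption.

```python
GIB = 2**30  # bytes per gibibyte

# VRAM figures copied from the table above, in GB.
TABLE_VRAM_GB = {"1.5B": 0.7, "7B": 3.3, "8B": 3.7,
                 "14B": 6.5, "32B": 14.9, "70B": 32.7}

# Nominal parameter counts (assumed; real checkpoints differ slightly).
PARAMS = {"1.5B": 1.5e9, "7B": 7e9, "8B": 8e9,
          "14B": 14e9, "32B": 32e9, "70B": 70e9}


def weight_memory_gib(n_params: float, bits_per_weight: int = 4) -> float:
    """Memory needed for the weights alone at the given precision."""
    return n_params * bits_per_weight / 8 / GIB


estimates = {name: weight_memory_gib(n) for name, n in PARAMS.items()}

for name, est in estimates.items():
    print(f"{name:>5}: estimated {est:5.1f} GiB, table {TABLE_VRAM_GB[name]:5.1f} GB")
```

Under these assumptions every estimate lands within roughly 0.1 GB of the table, so treat the table as a lower bound and leave headroom for longer prompts.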

Installing DeepSeek on Android and iOS Locally

Local installation options work well for specific cases or tech-savvy users with the right hardware. Most Android users will find the Play Store version the most stable and easiest to use. The official method and alternative approaches each have benefits that depend on your device’s capabilities and priorities.

The official app keeps your data encrypted during transfer and comes with complete features that make interaction quick. The DeepSeek AI Assistant app from the Play Store or App Store gives you a seamless experience with the DeepSeek-V3 model, which has over 600B parameters. The app works best on modern devices, and on Android you need version 5.0 or higher to run it smoothly.

However, the official app sends your conversations to DeepSeek’s servers, and the company’s privacy policy states that user data is stored in China, which may be a serious privacy concern for many users. Fortunately, apps like Private AI, PocketPal AI, Llamao, and LM Playground let you run AI models locally on Android devices and iPhones. In this guide, we’ll use PocketPal AI to run DeepSeek R1 on Android and iOS.

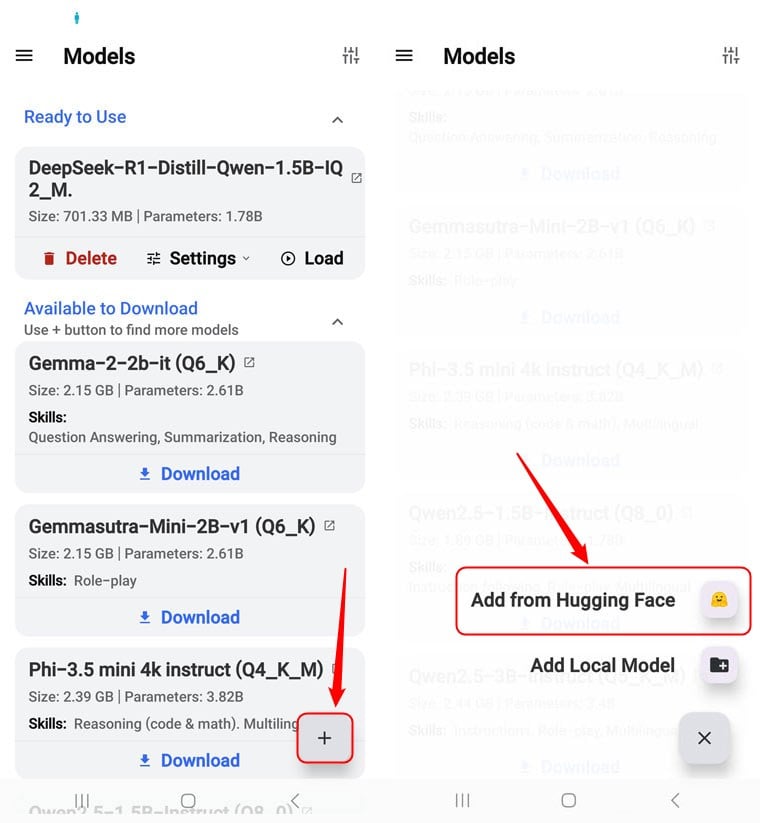

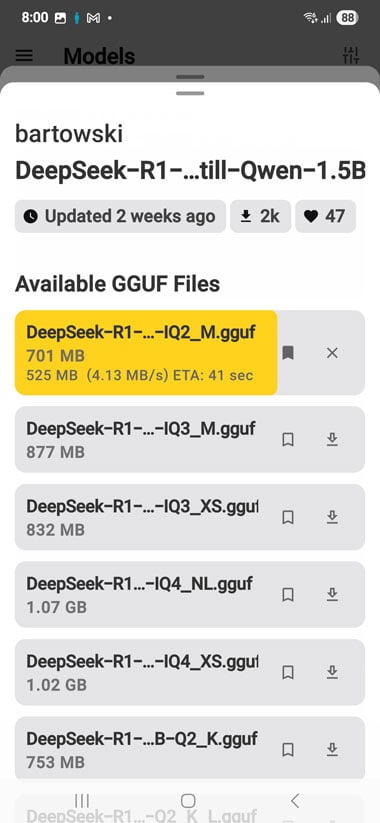

- Download and install the PocketPal AI app on your phone.

- Open the app and tap the Go to Models option on the bottom.

- Then tap the ‘+’ icon and select Add from Hugging Face.

- You’ll now see a long list of LLMs available for local download. Tap the Search box at the bottom and type DeepSeek. Look for the DeepSeek R1 model that suits your phone’s hardware and download it. In my case, I downloaded ‘DeepSeek-R1-Distill-Qwen-1.5B’ by Bartowski.

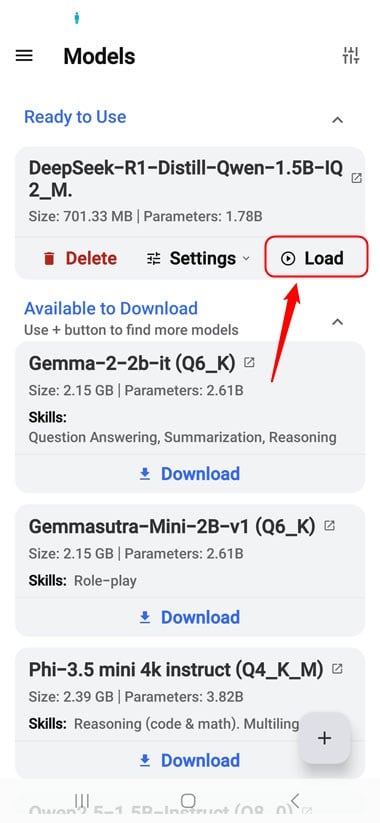

- When the download finishes, go back to the main screen in PocketPal, select Go to Models, and tap the Load option.

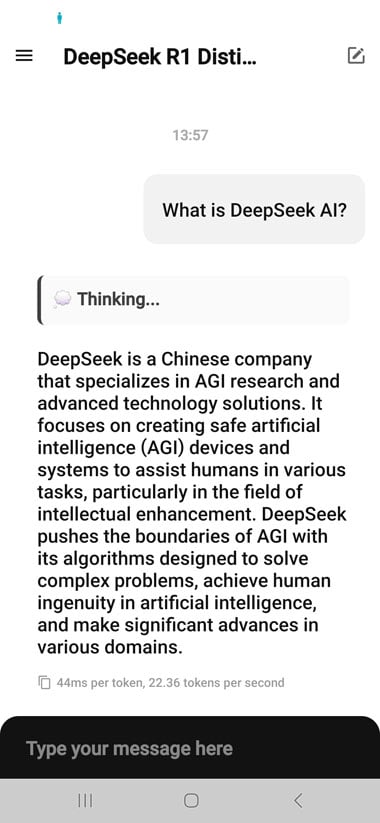

- That’s it! You’re all set to chat with DeepSeek AI and receive quick responses.

Running DeepSeek AI Locally on a Computer

DeepSeek AI works on Windows, macOS, and Linux through several installation methods. Your hardware capabilities and specific needs will determine the best path. Here’s what you need to know about the requirements and how to install it.

Using LM Studio on Windows, Mac, and Linux

LM Studio lets you run different DeepSeek models offline. The tool manages resource allocation and model loading automatically. This makes it perfect if you want a simple setup process.

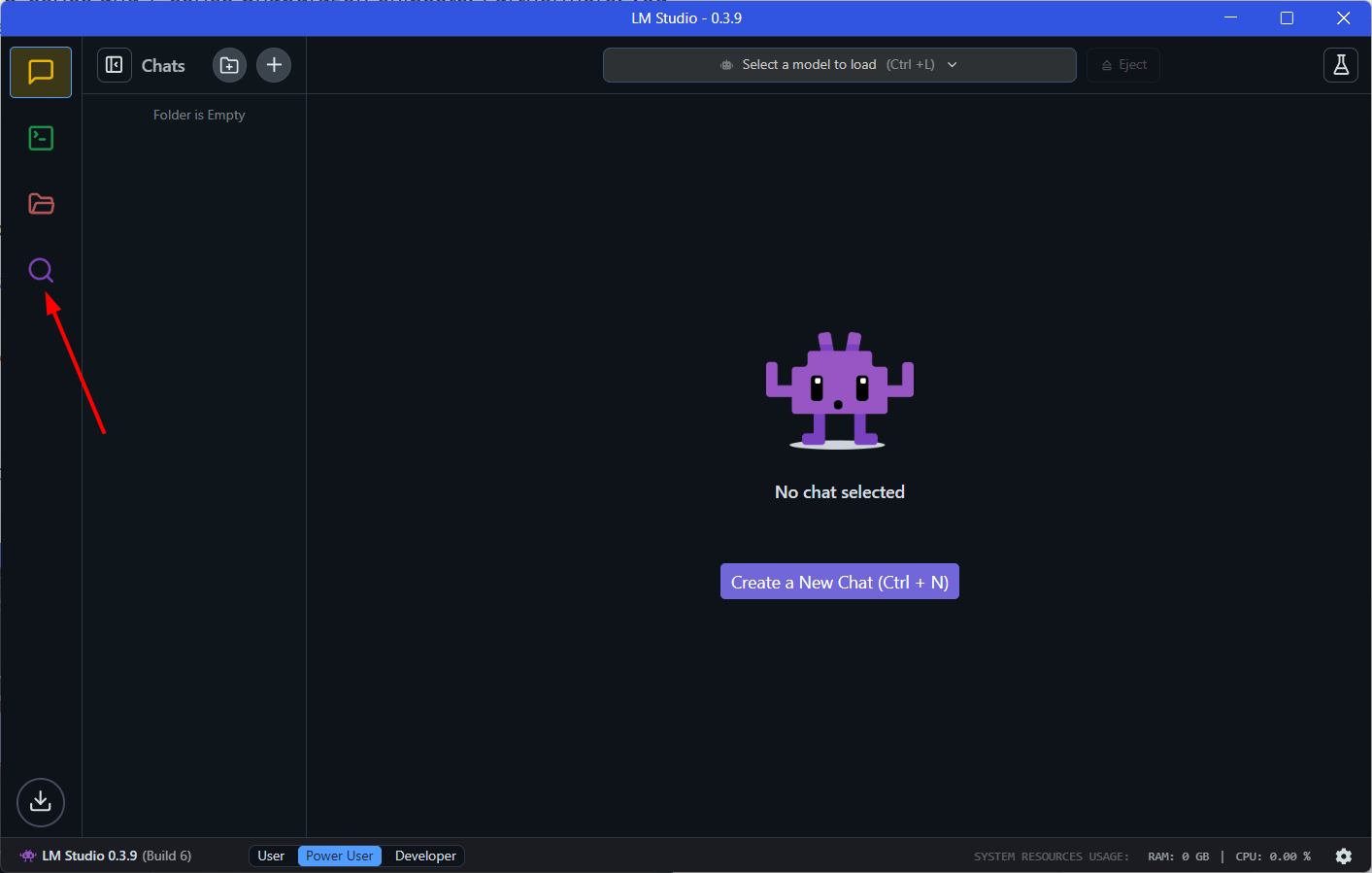

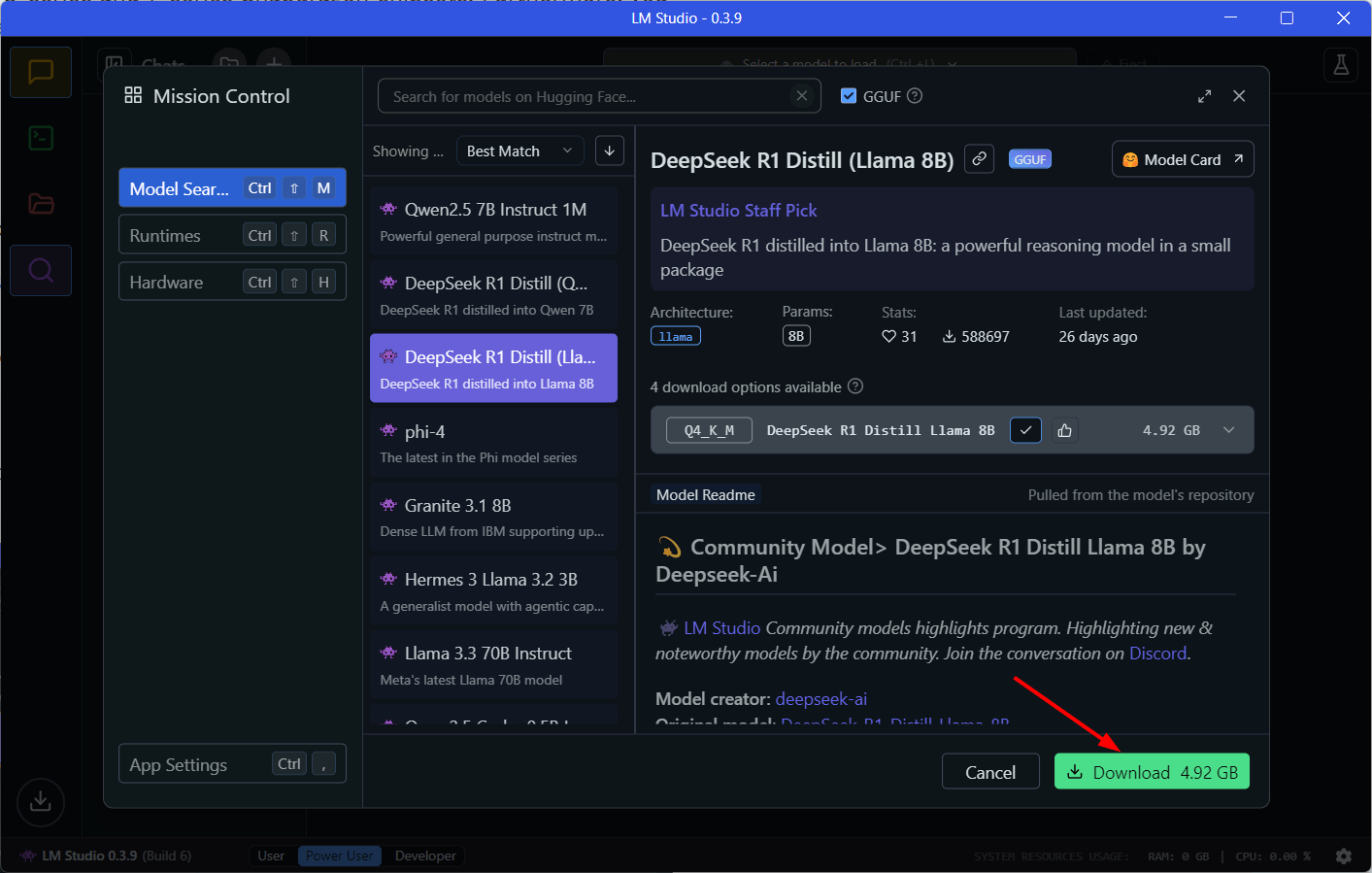

- Download LM Studio for Windows, Mac, or Linux from the official website.

- Install the software following the on-screen prompts.

- Click the Search/Discover icon on the left-hand pane and select the model that best suits your computer’s hardware configuration. I chose DeepSeek R1 Distill (Llama 8B).

- Click the Download button.

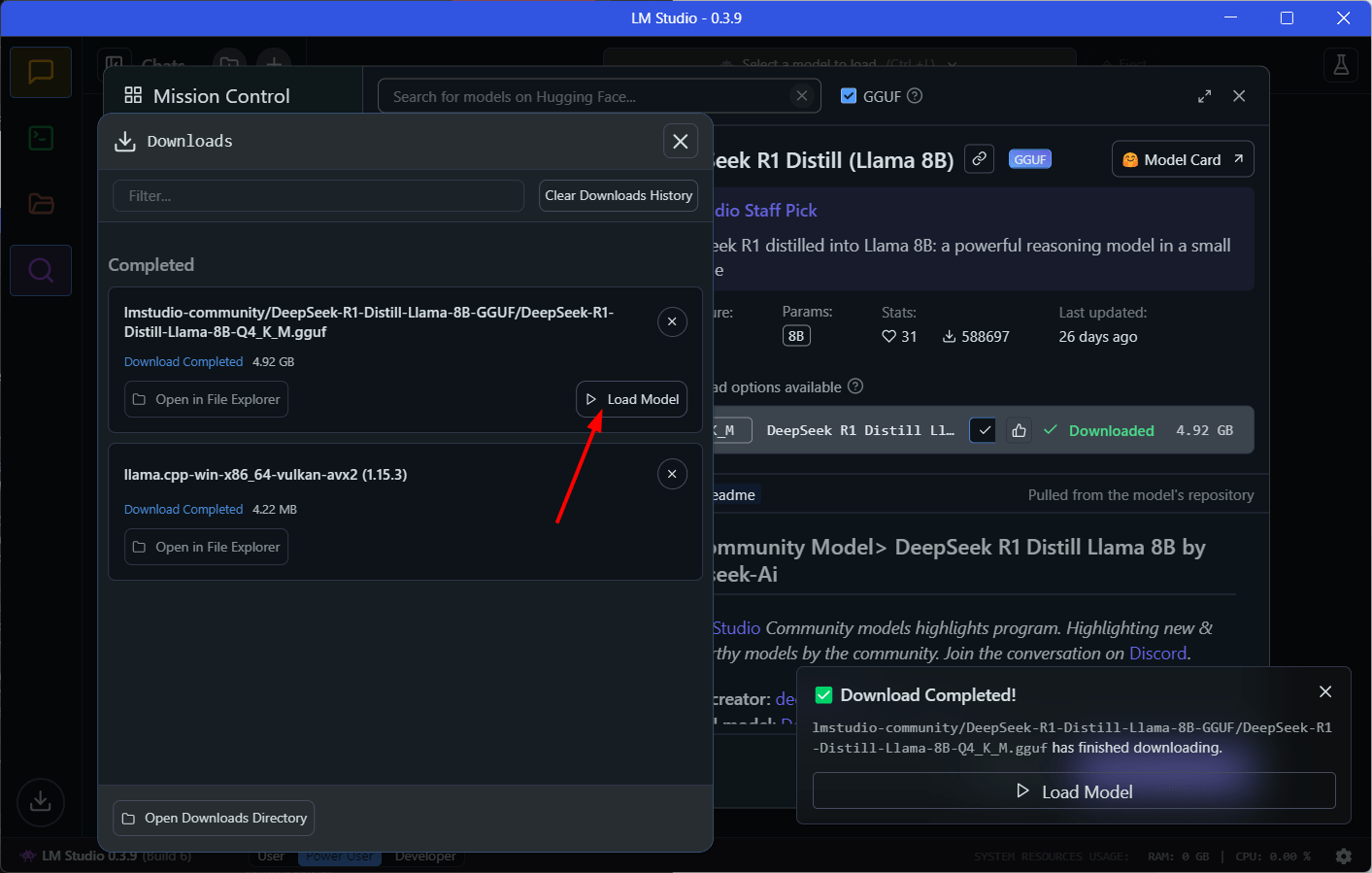

- Wait for the download to finish and then click the Load option.

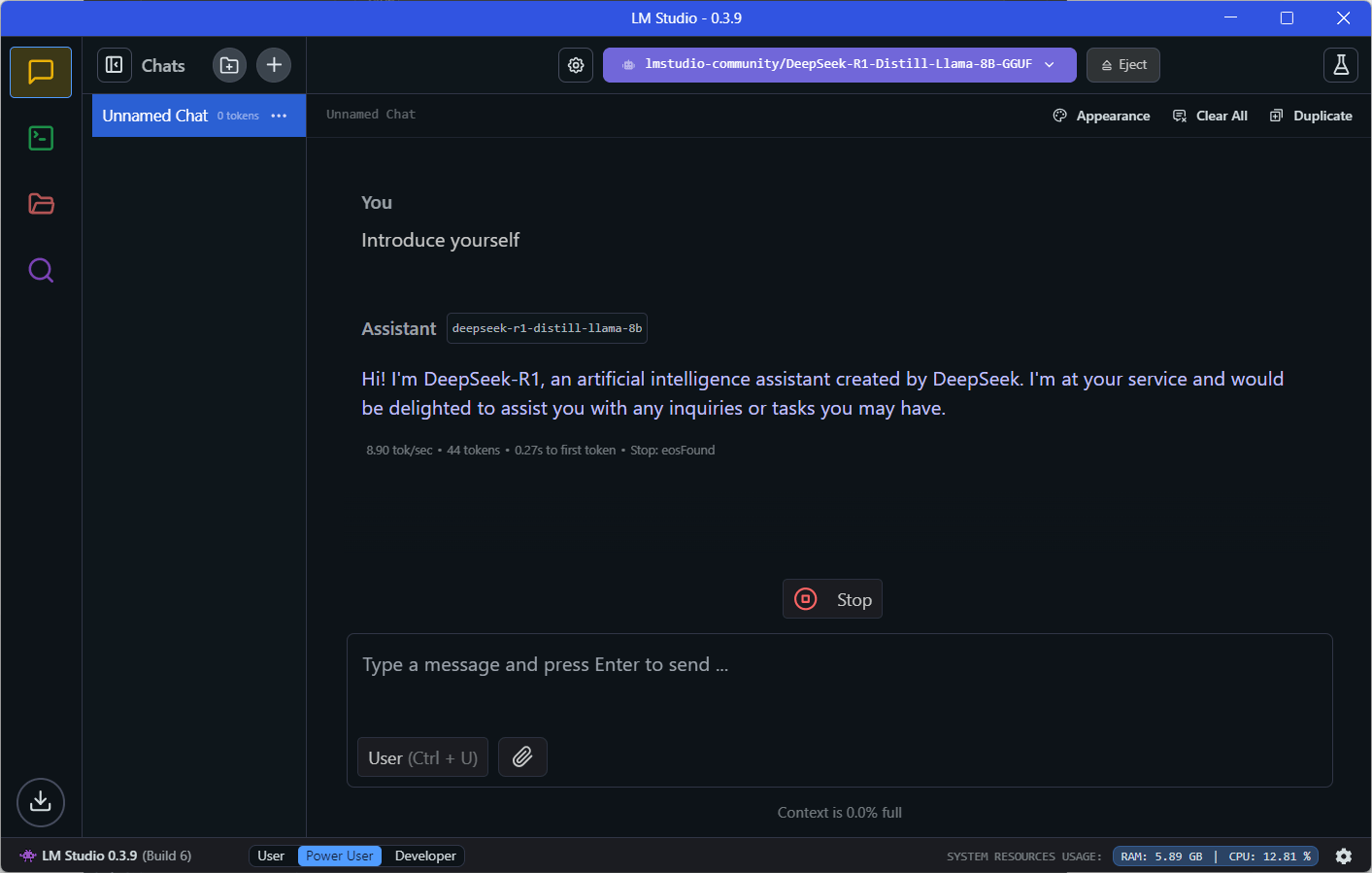

- Now you can chat with DeepSeek AI locally or offline.

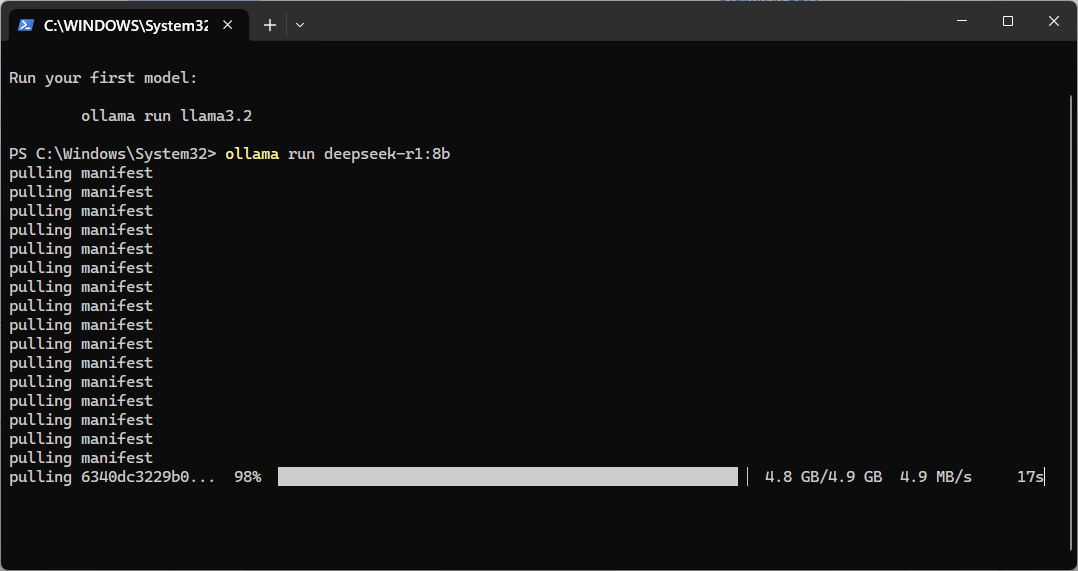

Using Ollama on Windows/Mac/Linux

Ollama gives you another reliable way to run Llama 3.3, DeepSeek-R1, Phi-4, Mistral, Gemma 2, and other models locally. Follow the steps below to install DeepSeek.

- Download the latest Ollama Setup from the official website.

- When the Ollama Setup file is downloaded, double-click to install it.

- When the installation finishes, Ollama will launch a Terminal window.

- To download DeepSeek R1, run one of the following commands, choosing the 1.5B, 7B, 8B, 14B, 32B, or 70B model according to your computer’s memory. The 1.5B model runs well on most systems with limited hardware, while users with stronger hardware can choose larger models up to 70B parameters.

ollama run deepseek-r1:1.5b

ollama run deepseek-r1:7b

ollama run deepseek-r1:8b

ollama run deepseek-r1:14b

ollama run deepseek-r1:32b

ollama run deepseek-r1:70b
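
If you are unsure which command to pick, the choice can be sketched in a few lines of Python. This sketch reuses the VRAM figures from the table earlier in this article and assumes they apply to the builds Ollama downloads. The 20% headroom and the helper names `pick_tag` and `ollama_command` are hypothetical.

```python
# (Ollama tag, memory requirement in GB from the VRAM table), smallest first.
MODELS = [("1.5b", 0.7), ("7b", 3.3), ("8b", 3.7),
          ("14b", 6.5), ("32b", 14.9), ("70b", 32.7)]


def pick_tag(free_memory_gb: float, headroom: float = 0.2):
    """Return the largest tag whose requirement plus headroom fits, else None."""
    best = None
    for tag, need_gb in MODELS:
        if need_gb * (1.0 + headroom) <= free_memory_gb:
            best = tag
    return best


def ollama_command(free_memory_gb: float):
    """Build the matching `ollama run` command, or None if nothing fits."""
    tag = pick_tag(free_memory_gb)
    return None if tag is None else f"ollama run deepseek-r1:{tag}"


print(ollama_command(4))    # memory budget of 4 GB
print(ollama_command(24))   # memory budget of 24 GB
```

The result is only a starting point. If responses are slow or the system starts swapping, step down to the next smaller model.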

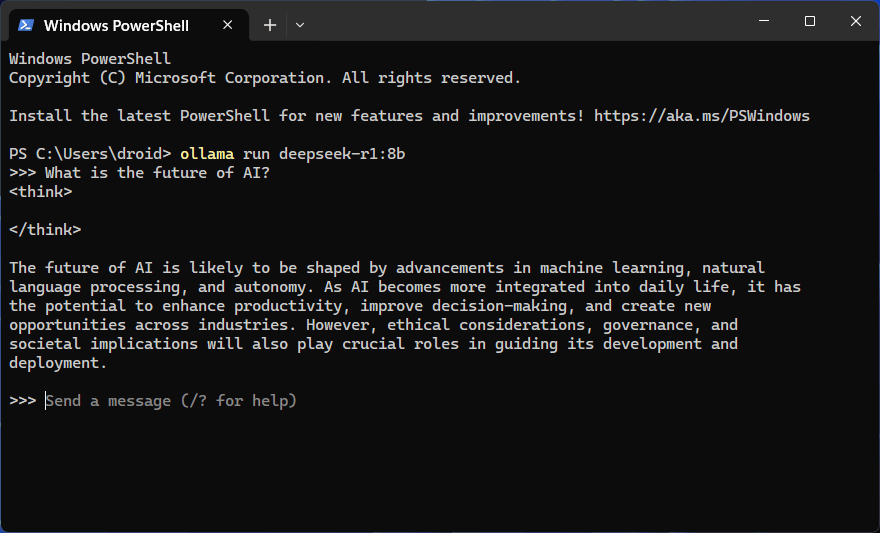

- Wait until the download is complete. You can now chat with DeepSeek by typing your query after the ‘Send a message‘ prompt.

- Please note that Ollama doesn’t have a GUI. To chat with DeepSeek or another language model next time, run the following command in the terminal.

ollama run deepseek-r1:8b
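
Besides the interactive prompt, Ollama also serves a local HTTP API, by default on port 11434, which is handy for scripting. The sketch below is a minimal Python example using only the standard library. It assumes Ollama is running with the `deepseek-r1:8b` model already downloaded, and that the model’s reasoning arrives inline between `<think>` tags, which may vary by Ollama version. The helper names are hypothetical.

```python
import json
import re
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default address


def build_payload(prompt: str, model: str = "deepseek-r1:8b") -> dict:
    """Request body for a single, non-streamed completion."""
    return {"model": model, "prompt": prompt, "stream": False}


def split_reasoning(text: str) -> tuple[str, str]:
    """Separate an inline <think>...</think> block from the final answer."""
    match = re.search(r"<think>(.*?)</think>", text, flags=re.DOTALL)
    if not match:
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


def ask(prompt: str, model: str = "deepseek-r1:8b") -> tuple[str, str]:
    """Send the prompt to the local Ollama server; needs Ollama running."""
    data = json.dumps(build_payload(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    return split_reasoning(body["response"])


# Example (requires a running Ollama server):
# reasoning, answer = ask("Why is the sky blue?")
```

Splitting the reasoning from the answer keeps the model’s long thinking trace out of the way when you only want the final reply.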

Now you know how to run DeepSeek AI and other LLMs locally using apps like PocketPal AI, Ollama, and LM Studio. If you know a better app to harness the power of AI offline, please let us know.

FAQs

Q1. What are the system requirements for running DeepSeek AI locally?

The requirements vary depending on the model size, but generally, you’ll need at least 8GB of RAM, 10GB of free storage space, and a modern GPU. For optimal performance with larger models, 32GB of RAM and an NVIDIA GPU with 16GB VRAM are recommended.

Q2. Can I use DeepSeek AI offline on my mobile device?

Yes, you can use DeepSeek AI offline on mobile devices through local installation methods. However, this requires more storage space and processing power compared to the cloud-based official app. The official app from the App Store or Play Store typically requires an internet connection for optimal functionality.

Q3. How does DeepSeek AI compare to other AI models in terms of performance and cost?

DeepSeek AI has demonstrated impressive performance, reportedly scoring 5.5 out of 6 in benchmarks and outperforming some competitors. It achieved these results at a significantly lower cost, with reported development expenses of about $5.6 million compared to $80-100 million for similar projects by other companies.

Q4. What are the privacy advantages of installing DeepSeek AI locally?

Installing DeepSeek AI locally offers enhanced data privacy as all processing occurs on your device or server. This eliminates the need to send sensitive information to external servers, provides full control over configurations and updates, and helps comply with strict data protection regulations.

Q5. How do I choose the right DeepSeek model for my needs?

Choosing the right DeepSeek model depends on your hardware capabilities and performance requirements. Models range from 1.5B to 70B parameters, with corresponding VRAM requirements from 0.7 GB to 32.7 GB. Consider your available GPU, storage space, and the complexity of tasks you plan to perform when selecting a model.